What is a scientific journal? One possible definition: a content provider that filters and selects scientific content of interest to a particular group of people. Currently, journals select papers based on the decisions of a small group of people, perhaps one or two editors supported by a few referees. The internet allows for alternative methods of selecting and filtering content, potentially drawing on the knowledge of a much larger group of people. These methods might one day replace the expensive editorial procedures now in place at most journals, but before that happens they have to be evaluated. And even if we never use them to replace current editorial procedures, they can help us highlight the most interesting work published in a given field.

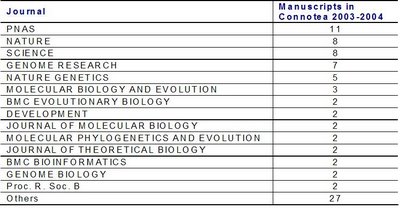

So, why not consider tags in social bookmarking services like Connotea as scientific journals? Here is the journal Connotea tag:evolution. Yesterday (21/11/2006) I took from Connotea all papers tagged "evolution" that were published in 2003 or 2004 (85 papers). I used Web of Science to get the number of citations for each of these papers (see figure below). Unfortunately, this had to be done one paper at a time. I am thinking of scripting a tool to do it automatically, but if someone knows a better way, please let me know.
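The selection step itself is simple once the bookmarks are in hand. Below is a minimal sketch, assuming the bookmarks have already been exported into Python records; the `Bookmark` type, its field names, and `select_tagged` are hypothetical illustrations, not Connotea's actual export format or API. Fetching citation counts would still need a separate source.

```python
from dataclasses import dataclass, field


@dataclass
class Bookmark:
    # Hypothetical record for one bookmarked paper; field names are illustrative.
    title: str
    journal: str
    year: int
    tags: set = field(default_factory=set)


def select_tagged(bookmarks, tag, years):
    """Return the bookmarks that carry `tag` and were published in one of `years`."""
    wanted = set(years)
    return [b for b in bookmarks if tag in b.tags and b.year in wanted]
```

For this post the call would be `select_tagged(bookmarks, "evolution", [2003, 2004])`. If several users bookmarked the same paper, the result may need de-duplicating (for example by DOI) before counting papers.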

As expected, the most represented journals are among the higher-visibility ones, but more than 50% of the tagged manuscripts still came from more specialized journals.
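The breakdown by source journal amounts to counting. Here is a small sketch, assuming we have one journal name per selected paper; which journals count as "high visibility" is the reader's choice and is passed in as a hypothetical set.

```python
from collections import Counter


def journal_shares(journals, high_visibility):
    """Return per-journal fractions (most frequent first) and the fraction
    of papers from journals outside the `high_visibility` set."""
    total = len(journals)
    if total == 0:
        raise ValueError("no papers to summarize")
    counts = Counter(journals)
    shares = {name: n / total for name, n in counts.most_common()}
    specialized = sum(n for name, n in counts.items() if name not in high_visibility) / total
    return shares, specialized
```

A `specialized` value above 0.5 corresponds to the "more than 50%" observation above, given whatever definition of high visibility is supplied.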

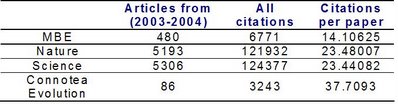


So how does the average number of citations per paper of this "new journal" compare with well-established journals? Although Connotea Evolution is low in volume compared to other journals, it has a higher average number of citations per paper than journals such as Nature and Science. I did not exclude potentially non-citable items (editorials, news and the like) from any of the groups, so all groups were treated the same way and the comparison should be reasonably fair.
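The comparison boils down to a mean per group. A minimal sketch, assuming citation counts have already been collected into a dictionary keyed by group name (a real journal, or a tag-defined "journal" such as tag:evolution); the function names are hypothetical.

```python
from statistics import mean


def citations_per_paper(groups):
    """Map each group name to its mean citations per paper.

    `groups` maps a name to a list of per-paper citation counts.
    Groups with no papers are skipped rather than raising an error.
    """
    return {name: mean(counts) for name, counts in groups.items() if counts}


def rank_groups(groups):
    """Return (name, mean citations) pairs, highest mean first."""
    averages = citations_per_paper(groups)
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)
```

Because every group is passed in unfiltered, non-citable items are treated identically across groups, matching the approach described above.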

I think this suggests that we should evaluate potential mechanisms for guiding us to interesting scientific content. By themselves, such evaluations might act as a reward that encourages the community to come up with more sophisticated tools. It is therefore important to pick the measurements carefully. I used citations per paper, but other metrics might be more appropriate.
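One reason to look beyond the mean is that citation counts are typically highly skewed, so a handful of heavily cited papers can dominate the average. The sketch below reports a few alternative summaries for one group. The `threshold` cutoff is an arbitrary illustrative choice, not an established standard.

```python
from statistics import mean, median


def summarize(counts, threshold=10):
    """Alternative summaries of one group's per-paper citation counts.

    `threshold` is an illustrative cutoff for "well cited" papers.
    """
    if not counts:
        raise ValueError("empty group")
    return {
        "papers": len(counts),
        "mean": mean(counts),
        "median": median(counts),
        # Fraction of papers cited at least `threshold` times.
        f"share_ge_{threshold}": sum(c >= threshold for c in counts) / len(counts),
    }
```

For example, `summarize([0, 1, 2, 100])` gives a mean of 25.75 but a median of 1.5, showing how a single outlier can inflate the average.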

Any group of people can use the internet to regroup scientific content (especially if it is open access) into "journals" that are potentially more valuable than those currently available.
